For most of my life, I struggled with the assumption that people with letters after their name were not only smarter, more powerful, and more successful than me, but that the research they produce is gospel. I’m not sure when or how this seed was planted, but it’s led to a lifelong feeling of inadequacy, especially throughout my twenties. Doctors and scientists were busy saving lives and having eureka moments. Meanwhile, I made silly cupcakes for a living and couldn’t afford health insurance.

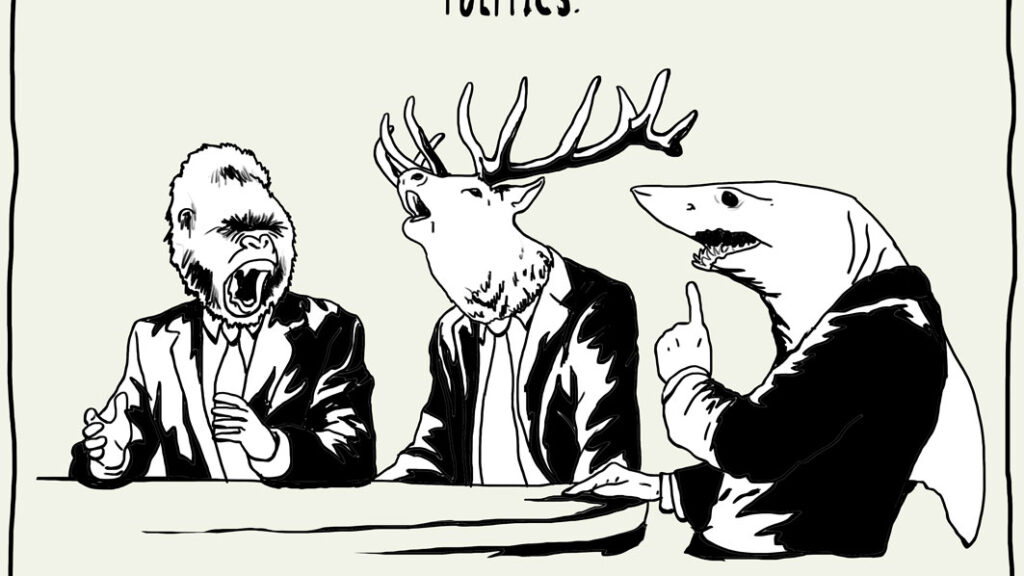

Assuming that all doctors and research belonged on a pedestal is also part of why I so easily accepted my mental health diagnosis. I knew I was depressed, but what did I know about how to fix it? A doctor told me that my brain was broken and that the pills I was taking did not have any major side effects. Who was I to question someone who spent 12 years learning how to identify and treat my exact problem? It is only since getting off the antidepressants that I’ve begun to understand how complicated, political, and often corrupt the medical and research system actually is. And this isn’t a conspiracy theory. Bad science exists in every discipline. The Guardian even has an entire vertical dedicated to it.

While researchers are adept at sorting good science from bad, regular folk rarely know the difference, which can lead to a plethora of misinformation and ill-informed opinions. But I’ve learned a few basic strategies to help us plebeians separate the good from the bad when it comes to mental health research. This is by no means a foolproof or comprehensive list, but it’s a start.

Where to find research papers:

PubMed is a free search engine that primarily accesses the MEDLINE (Medical Literature Analysis and Retrieval System Online) database of research on life science and medical topics. You can filter and sort results by author, publication date, journal, and more, and there’s a nifty filter that limits your results to papers with free full text. Unfortunately, the full text of many research papers is hidden behind paywalls, which leaves the average person stuck with nothing but abstracts.

Google Scholar is…well, the Google of research. Whether you’re looking for research on antidepressants or conifer trees, Google Scholar is the grand poobah of scientific information. However, because Google Scholar is a general search engine and not a subject-dedicated database (like PubMed), it casts a wide net and indexes as many journals as possible, including junk journals and predatory journals. Predatory journals exploit the academic publishing business model: they charge authors to publish, skip meaningful quality checks, and will push an agenda even in clear cases of fraudulent science.

All this to say that before you read a paper, you need to do a bit of due diligence to make sure it’s legitimate. Even then, we can’t be 100% sure. Case in point: Andrew Wakefield’s fraudulent study claiming that the MMR vaccine causes autism was published in The Lancet, one of the most respected medical journals in the world, in 1998, and wasn’t retracted until 2010.

I know, I know. The number one rule in research is: don’t use Wikipedia as a source. Any old geezer (including you) can log on to Wikipedia and change an entry (any entry) to say anything and everything, which means that Wikipedia is riddled with errors and should not be referenced as truth in a research paper or reported article. But since we’re not reporting for the New York Times, Wikipedia is a good place to start because of the references listed at the bottom of each Wikipedia entry. The Wikipedia page on Antidepressant Discontinuation Syndrome, for example, links directly to 27 different sources on the topic.

But finding research is only the first step. With so much junk science out in the world, it’s imperative to learn how to tell the good from the bad. Here’s how:

Check the Citations

Google Scholar is one of my favorite ways to source research, but because Google Scholar is a search engine and not a curated database, articles published in known predatory journals may pop up in your search results.

The quickest way to gauge whether an article is legit is to check the “Cited by” number below each search result. If an article has multiple citations, other researchers are referring to it in their own work, which suggests legitimacy. It’s rare for an article to rack up well over a thousand citations like Eugene Paykel’s excellent study “Life and Depression: A Controlled Study.” With 1,495 citations, Paykel’s study is the research equivalent of a New York Times bestseller. But according to academics, even a citation count in the mid-single digits is usually enough to assume the research isn’t bunk.

Journal Ranking

While citations are a great place to start, they take time to accumulate. Paykel’s article has been around since 1976, which means it has nearly half a century of research built upon it. New research won’t come with shiny citations, so you need to look at the journal it’s published in to see if it’s legitimate.

Academic journals are often ranked for impact using a metric called the H-Index. A journal’s H-Index is the largest number h such that h of its articles have each been cited at least h times, so it reflects both how much a journal publishes and how often that work gets cited. A higher H-Index generally means a more influential journal. However, citation habits vary between fields, so you can’t compare H-Indexes across disciplines.
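For the curious, here’s a rough sketch of how an H-Index gets calculated, written in Python. It’s just the general idea, not how SJR actually computes its numbers (SJR draws on Scopus citation data), and the citation counts below are made up for illustration.

```python
def h_index(citation_counts):
    """Return the largest h such that at least h articles have >= h citations each."""
    # Rank articles from most-cited to least-cited.
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        # The article at this rank still has at least `rank` citations,
        # so h can grow to `rank`.
        if cites >= rank:
            h = rank
        else:
            break
    return h

# Hypothetical citation counts for five articles in one journal.
print(h_index([10, 8, 5, 4, 3]))  # 4
```

In plain English: line the articles up from most to least cited and walk down the list until an article has fewer citations than its position. That’s also why the number tends to favor older, bigger journals, since more articles and more years mean more chances to climb.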

Find journal rankings by googling the name of the journal and the word “ranking.” The Scimago Journal & Country Rank (SJR) should be one of the first Google results, and that will show you the H-Index of the journal in question.

For layman’s purposes, the H-Index doesn’t matter too much. Think of it like the college system. Harvard isn’t the same as Iowa State, but that doesn’t mean Iowa State isn’t capable of producing good citizens (and we all know question marks who graduated from top-tier universities). The top journals produce great work, but there is still plenty of meaningful work to be found in smaller journals. A low ranking isn’t necessarily a problem, but no ranking is. Junk publications and predatory journals generally aren’t indexed by SJR, so they won’t have an H-Index listed there. If a publication you’re reading doesn’t have one, run far, far away.

Crosscheck Beall’s List

If the journal doesn’t appear in SJR, your predatory-journal spidey sense should go off. Cross-reference the journal against Beall’s List, a list of potentially predatory publishers and journals compiled by librarian Jeffrey Beall. Beall took the list offline in 2017, but archived versions are still maintained online. The sheer number of journals listed is astounding, and it’s easy to see how unsuspecting readers could be duped.

Need a little giggle? Order one of my Fuckit Buckets™.

After 15 years of depression and antidepressants, my mission is to help people find hope in the name of healing. My memoir on the subject, MAY CAUSE SIDE EFFECTS, publishes on September 6, 2022. Pre-order it on Barnes & Noble, Amazon, or wherever books are sold. For the most up-to-date announcements, subscribe to my newsletter HAPPINESS IS A SKILL.
